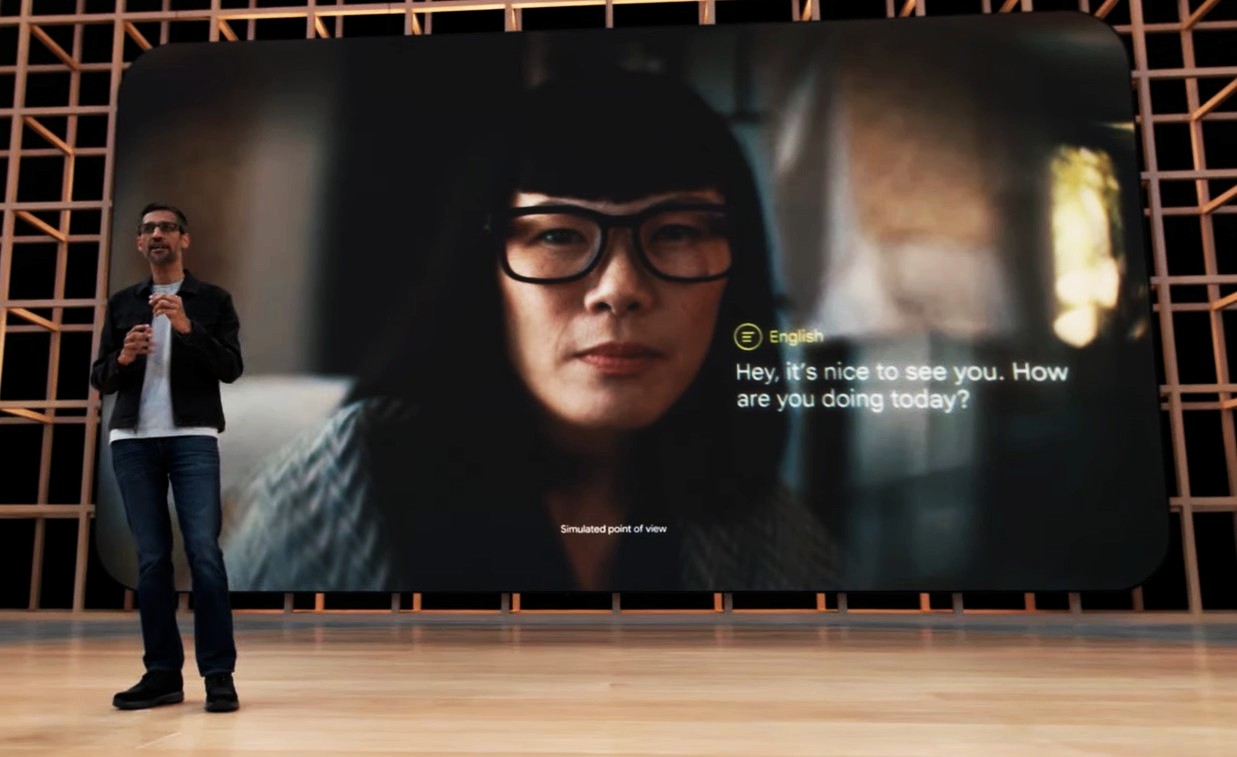

Augmented Language was featured in the Google I/O 2022 Keynote. Live translation of speech in everyday glasses has the potential to make language more universally accessible and understandable.

"Let's see what happens when we take our advances in translation and transcription, and deliver them in your line-of-sight in one of the early prototypes that we have been testing." (Sundar Pichai, CEO, Google)

SpeechCompass: Enhancing Mobile Captioning with Diarization and Directional Guidance via Multi-Microphone Localization

Dementyev, A., Kanevsky, D., Yang, S., Parvaix, M., Lai, C., and Olwal, A.

Proceedings of CHI 2025 (ACM CHI Conference on Human Factors in Computing Systems), Yokohama, Japan, Apr 26-May 1, 2025, pp. 1-17. Best Paper Award (Top 1%).


InstructPipe: Generating Visual Blocks Pipelines with Human Instructions and LLMs

Zhou, Z., Jin, J., Phadnis, V., Yuan, X., Jiang, J., Qian, X., Wright, K., Sherwood, M., Mayes, J., Zhou, J., Huang, Y., Xu, Z., Zhang, Y., Lee, J., Olwal, A., Kim, D., Iyengar, R., Li, N., and Du, R.

Proceedings of CHI 2025 (ACM CHI Conference on Human Factors in Computing Systems), Yokohama, Japan, Apr 26-May 1, 2025, pp. 1-22. Best Paper Honorable Mention Award (Top 5%).


Wearable Subtitles: Augmenting Spoken Communication with Lightweight Eyewear for All-day Captioning

Olwal, A., Balke, K., Votintcev, D., Starner, T., Conn, P., Chinh, B., and Corda, B.

Proceedings of UIST 2020 (ACM Symposium on User Interface Software and Technology), Virtual Event, Oct 20-23, 2020, pp. 1108-1120. Best Demo Honorable Mention Award.


Quantifying The Effect of Simulator-Based Data Augmentation for Speech Recognition on Augmented Reality Glasses

Arakawa, R., Parvaix, M., Lai, C., Erdogan, H., and Olwal, A.

Proceedings of ICASSP 2024 (IEEE International Conference on Acoustics, Speech and Signal Processing), Seoul, South Korea, Apr 14-19, 2024, pp. 726-730.


ChatDirector: Enhancing Video Conferencing with Space-Aware Scene Rendering and Speech-Driven Layout Transition

Qian, X., Tan, F., Zhang, Y., Collins, B., Kim, B., Olwal, A., Ramani, K., and Du, R.

Proceedings of CHI 2024 (SIGCHI Conference on Human Factors in Computing Systems), Honolulu, HI, May 11-16, 2024, pp. 1-16.


Visual Captions: Augmenting Verbal Communication with On-the-fly Visuals

Liu, X., Kirilyuk, V., Yuan, X., Olwal, A., Chi, P., Chen, X., and Du, R.

Proceedings of CHI 2023 (SIGCHI Conference on Human Factors in Computing Systems), Hamburg, Germany, Apr 23-28, 2023, pp. 1-20.


Modeling and Improving Text Stability in Live Captions

Liu, B., Zhang, J., Ferrer, L., Xu, S., Bahirwani, V., Smus, B., Olwal, A., and Du, R.

CHI 2023 Extended Abstracts (SIGCHI Conference on Human Factors in Computing Systems), Hamburg, Germany, Apr 23-28, 2023, pp. 1-9.
